Securing Proxmox using ZFS' native encryption

This article was heavily inspired by the article Using Native ZFS Encryption with Proxmox (archive), published on the PrivSec blog and covering this exact topic. That article helped me a lot in figuring out the steps required to encrypt a Proxmox setup using ZFS native encryption. Still, I found that some of the background knowledge it relies on could use more coverage, and some of the steps could do with more explanation.

This article is self-contained, so if you are interested in a detailed write-up, please continue reading. If a quick read is more your speed, I would recommend giving the PrivSec article a read first.

Update - 08.02.2026: If you plan to make use of Proxmox’s clustering, note that using native ZFS encryption and following the instructions below will prevent you from using replication features across your cluster[1].

(Thank you KoS for the tip).

Introduction

A few months ago I started experimenting with the Proxmox Virtual Environment (PVE for short). If the title of this article is what brought you here, you probably already know what Proxmox is. If you are new to this, the short of it is that Proxmox is a Debian-based Linux operating system tailored for virtualization. Proxmox acts as a type-1 hypervisor (see What is a hypervisor) and makes it extremely easy to spin up virtual machines and containers alike (though it is geared towards LXC containers rather than Docker). For these reasons, Proxmox is extremely popular in the homelab/hobbyist community.

One thing that I (and many before me) found unsatisfying in the Proxmox installation process, and in the configuration options Proxmox makes available, is the lack of an officially supported full-disk encryption option. Looking around the Internet for tutorials on the matter, I did find a couple of articles that guided me through manually configuring “full-disk encryption” on Proxmox. I am purposefully using quotes here because, as it turned out, achieving this goal is not as straightforward as one might assume (hence the length of this article).

Furthermore, an aspect I had not thought of when starting my online research is how unlocking the encrypted volumes would be handled at boot. A desktop or laptop is always within physical reach of its user and has a keyboard and monitor attached, which allow typing in the password needed to unlock the encrypted data at boot. A Proxmox server, in contrast, usually runs headless somewhere not necessarily easily accessible, so a remote unlock strategy is required.

Why use ZFS native encryption

Proxmox supports ZFS as its native file system and suggests it as the default during installation when multiple disks are detected[2]. ZFS is a filesystem, and a very advanced one at that. Its features include, but are not limited to: transparent compression and encryption, data deduplication, copy-on-write, snapshot support, hierarchical data checksumming, software RAID, etc. Honestly, I have neither the knowledge nor the experience needed to explain the awesomeness of ZFS. For that, I would point you towards Level1Linux’s excellent video or Ars Technica’s great write-up on the topic. Hopefully, if you are reading beyond this point, you have a basic understanding of what ZFS is and how it works.

The one aspect of ZFS that is relevant here is that it pulls double duty, handling both:

- Volume management: handling the block storage devices (i.e. the physical disks) and organizing them into logical volumes.

- Data management: handling the files stored on the logical volumes.

It is through this complete control of the storage system that ZFS can deliver the many features it supports. I mention this because this requirement makes it necessary to reconsider some of the usual approaches. Specifically, when it comes to full-disk encryption, the Linux way to do it (as far as I know) is to use Linux’s dm-crypt backend to create a LUKS container encompassing the whole disk. This is usually combined with LVM, which allows building logical volumes on top of the LUKS container, so that multiple partitions are protected with a single LUKS key[3]. Following the same approach in a ZFS context, however, is a recipe for degraded performance at best, and unrecoverable loss at worst. This is because the encryption layer would have to go either beneath or atop ZFS, and either option is problematic[4]:

- Option #1 - Fully encrypting the disks beneath ZFS: The disks would need to be decrypted before ZFS could import the pool. Seeing as ZFS is usually used in configurations spanning multiple disks, each disk would have to be encrypted separately, and all of them unlocked at boot before the ZFS pools could be imported. Furthermore, even if ZFS were used with only one or two disks, it would only have access to the logical LUKS container and not to the physical disk, so all the fine-tuning ZFS brings to optimizing disk I/O would be for naught. Finally, if something were to go wrong with the LUKS container, none of ZFS’s redundancy measures would help, since the ZFS layer would be locked behind the LUKS-encrypted layer.

- Option #2 - Using encryption atop ZFS volumes: This is not an optimal solution either, mainly because of ZFS’s transparent compression. By default ZFS compresses data before writing it to disk, which optimizes I/O and saves disk space. However, encrypted data is generally not compressible, so ZFS’s compression would be ineffective in such a scenario. Furthermore, using (third-party) disk encryption atop ZFS only works with ZFS volumes (ZVOLs), and is not possible with datasets, which are what Proxmox uses. It is worth mentioning, though, that at the dataset level one can make use of stacked filesystem encryption solutions, which encrypt logical files instead of logical device blocks (see for example eCryptfs). Opting for such an alternative comes with compromises, of course[5].

Hopefully, the explanations above have made you realize that mixing dm-crypt/LUKS (or any third-party encryption solution) with ZFS is not a good idea. Thankfully, ZFS includes a native encryption feature that allows creating encrypted datasets without any additional software modules.

How to use ZFS native encryption

After installing Proxmox on my host machine, and running it for a couple weeks, the ZFS layout looks as follows:

```
# zfs list
NAME                           USED  AVAIL  REFER  MOUNTPOINT
rpool                         7.44G   892G   104K  /rpool
rpool/ROOT                    2.59G   892G   192K  /rpool/ROOT
rpool/ROOT/pve-1              2.59G   892G  2.42G  /
rpool/data                    3.83G   892G   200K  /rpool/data
rpool/data/subvol-102-disk-0  2.15G   118G  2.10G  /rpool/data/subvol-102-disk-0
rpool/data/vm-100-disk-0      1.68G   892G  1.67G  -
rpool/var-lib-vz               981M   892G   981M  /var/lib/vz
```

Figure 1: Proxmox’s default ZFS layout


By default, Proxmox makes use of a single pool, rpool, and creates the following datasets:

- rpool/ROOT is the dataset used to store the root filesystem of the OS. The root filesystem is not installed on the rpool/ROOT dataset directly, but on its child dataset rpool/ROOT/pve-1 (I tried to find out why that is but couldn’t find a definitive answer).
- rpool/data is used to store the disk images of VMs and the root directories of containers. On my system shown above, rpool/data/vm-100-disk-0 is an example of a VM disk image and rpool/data/subvol-102-disk-0 an example of a container root directory.
- rpool/var-lib-vz is the default local storage used for ISOs, backups, container templates and other types of data (this is configurable through the Proxmox GUI).

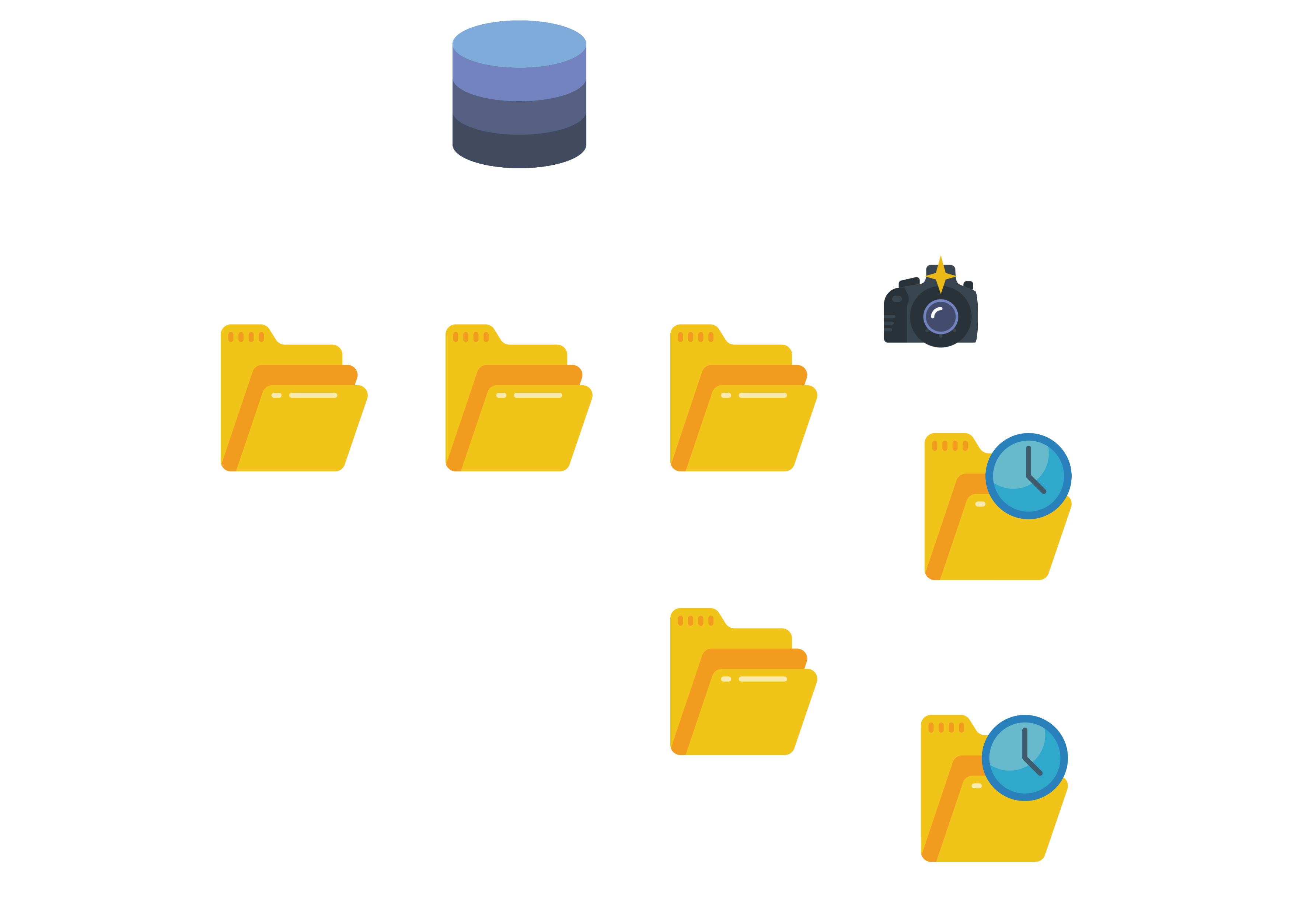

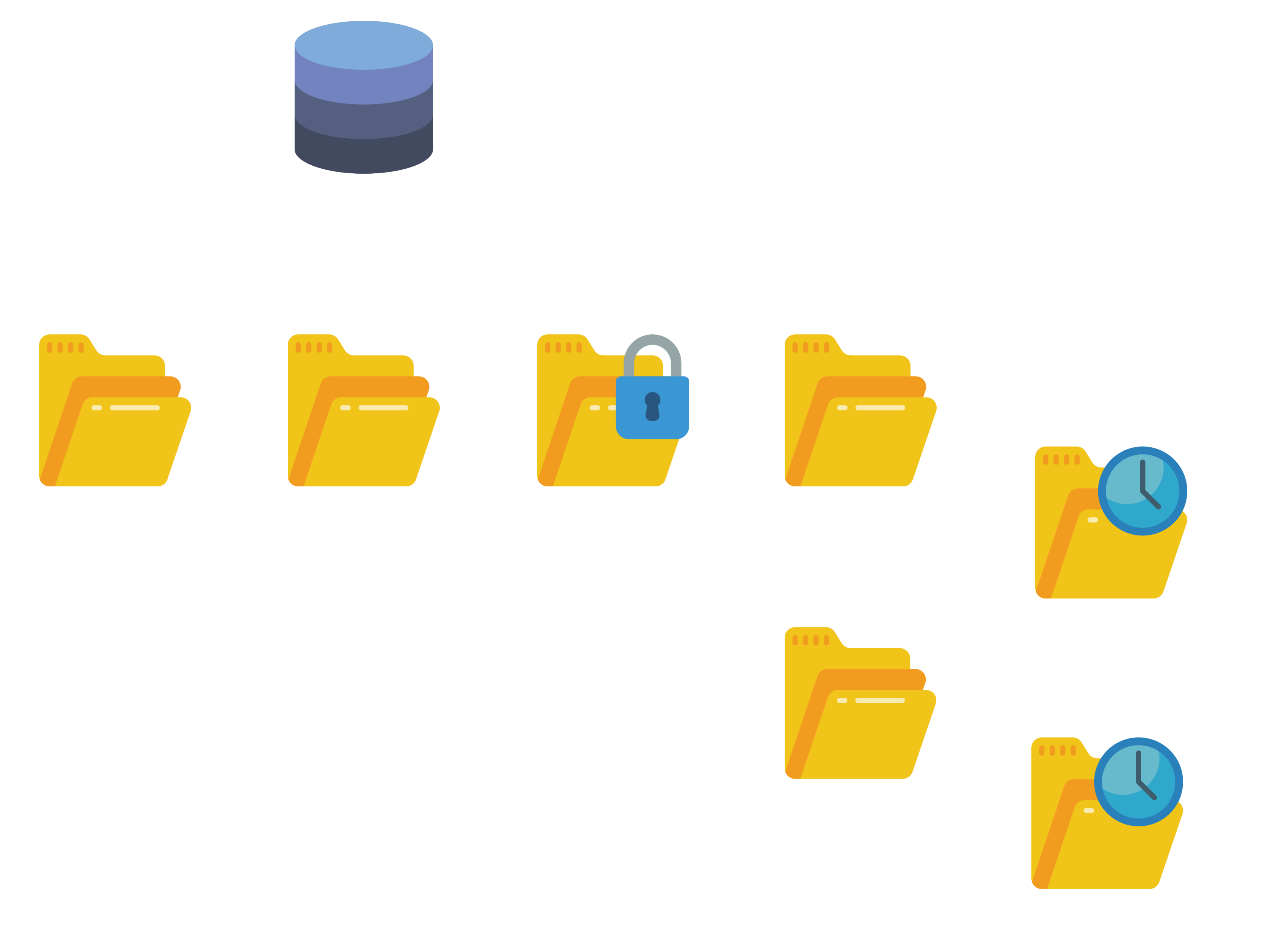

This default dataset layout is illustrated in Figure 1.

By default, none of these datasets is encrypted. You can check this with the zfs get encryption command:

```
# zfs get encryption
NAME                          PROPERTY    VALUE  SOURCE
rpool                         encryption  off    default
rpool/ROOT                    encryption  off    -
rpool/ROOT/pve-1              encryption  off    -
rpool/data                    encryption  off    -
rpool/data/subvol-102-disk-0  encryption  off    -
rpool/data/vm-100-disk-0      encryption  off    -
rpool/var-lib-vz              encryption  off    -
```

Encryption can only be enabled when a dataset is created. For this reason, encrypting the datasets above requires creating new datasets (this time with encryption enabled), moving the data from the original plain datasets to the new encrypted ones, and then removing the old datasets. Additionally, because encryption is a property that can only be set at the dataset level (and not at the pool level), we will need to perform this operation at least three times (once for each of the three top-level datasets described previously). Thankfully, because child datasets inherit encryption from their parent, we do not need to repeat this for every dataset in the pool, nor for future child datasets.
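To keep track of which datasets still need work, the output of zfs get can be filtered in scripted mode. Below is a minimal sketch that assumes a POSIX shell and awk. The function name list_unencrypted is hypothetical. The sketch relies on the -H flag of zfs get (no header, tab-separated fields) combined with -o name,value.

```shell
#!/bin/sh
# Hypothetical helper: print the names of datasets whose "encryption"
# property is "off". Expects "name<TAB>value" lines on standard input,
# as produced by:
#   zfs get -H -o name,value -t filesystem,volume encryption
list_unencrypted() {
    awk -F '\t' '$2 == "off" { print $1 }'
}

# Usage on the Proxmox host (as root):
#   zfs get -H -o name,value -t filesystem,volume encryption | list_unencrypted
```

Encrypted datasets report their cipher (for example aes-256-gcm) rather than off, so they drop out of the list once the steps below are done.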

Encrypting the ROOT dataset

The first dataset we will encrypt is rpool/ROOT. Encrypting this dataset, rather than its child rpool/ROOT/pve-1, ensures that all its future children will also inherit the encryption property. Because this dataset contains the root filesystem, it cannot be encrypted while the system is booted from it. The easiest way around this is to perform the operation from within the initramfs, which the system uses early on during the boot process.

Put simply, an initramfs is an archive containing a rudimentary root filesystem that is needed during the initial boot of a *nix system. It contains the modules, drivers, and miscellaneous scripts and binaries needed to access the devices on which the real root filesystem lives. Examples of scenarios where such modules are needed include a root filesystem hosted on a RAID array, on network storage, or on an encrypted disk, and, as is the case for our Proxmox system, a root filesystem living in a ZFS dataset. During the Linux boot process the initramfs acts as a temporary root filesystem containing everything necessary to mount the main root filesystem. Once the main root filesystem is mounted, the system switches into it and the boot process continues from there by starting the init process[6].

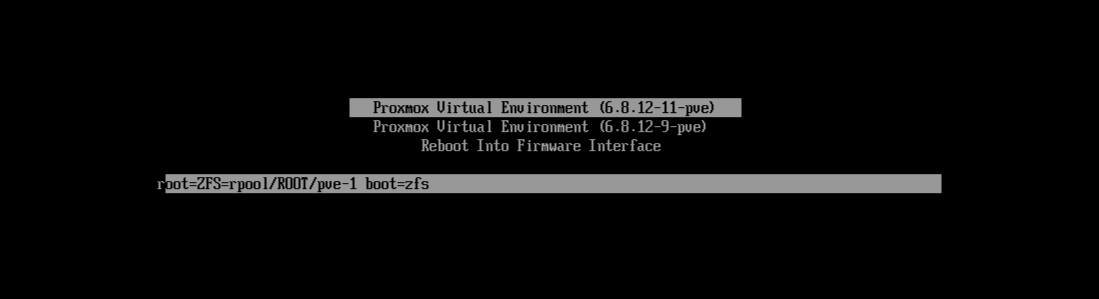

To encrypt the ROOT dataset from within the initramfs, we will reboot Proxmox and interrupt the boot process right after the initramfs has been loaded into memory and mounted as the root filesystem, but before the system has had a chance to mount the main root filesystem. This leaves us in a state where the root dataset is not mounted yet (so we can freely encrypt it), with all the tools we need to perform the encryption at hand (the zfs module is included in the loaded initramfs). An easy way to interrupt the boot process is to press the e key in Proxmox’s bootloader selection menu (this requires physical access to the Proxmox server). After doing so, a new line appears in the text interface showing the kernel parameters Proxmox uses to boot the Linux kernel. Figure 2 shows the result of interrupting the boot process at the bootloader’s selection screen.

Figure 2: Proxmox’s bootloader selection screen (the bottom line only appears after pressing the e key)

Without delving too deep into it, kernel parameters are passed by the bootloader to the Linux kernel during the boot process as a way to set options and control its behavior. Figure 2 shows that the Proxmox bootloader defines two kernel parameters:

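- root: This tells the kernel where to find the root filesystem. The value ZFS=rpool/ROOT/pve-1 indicates that the root filesystem is located on a ZFS pool, and specifies the pool and dataset where it is to be found.
- boot: I couldn’t find much documentation about this kernel parameter. My understanding is that it is used by the Proxmox bootloader to indicate that the system will boot from a ZFS root filesystem. However, I don’t find this explanation convincing, because the root parameter already indicates this… As far as I can tell, boot is actually read by Debian’s initramfs-tools rather than by the kernel. It selects which of the initramfs boot scripts mounts the root filesystem (here, the zfs script shipped with the ZFS initramfs support), while root tells that script what to mount.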

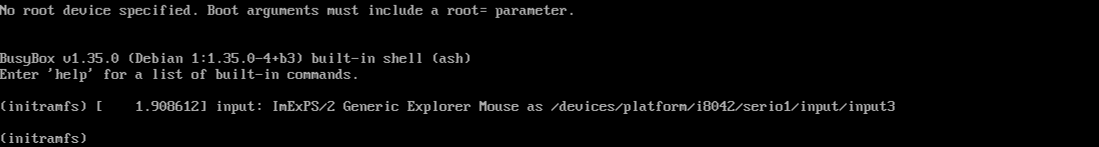

To interrupt the boot process, we will edit the kernel parameters above and simply delete them. This causes the initramfs to fail to find an appropriate filesystem to mount as root, so it falls back to a minimalist shell in which the user is expected to decide the next step. This is shown in Figure 3.

Figure 3: shell prompt after interrupting the boot process


We can now finally get busy with our ZFS pool, without having to worry about it being mounted as the root filesystem. Before we can start tinkering with it, though, we need to load the zfs kernel module. This module provides the ZFS functionality that the zfs and zpool commands rely on. It can be loaded by running the following command:

```
modprobe zfs
```

We now need to import the rpool ZFS pool so we can start tinkering with it:

```
zpool import -f rpool
```

Now we can finally encrypt the rpool/ROOT dataset. As mentioned earlier, ZFS only allows the encryption property of a dataset to be set at creation time. For this reason, what we need to do is:

- Create a new dataset with the encryption property enabled.

- Move our data from the old non-encrypted root dataset to the newly created encrypted one.

- Delete the old root dataset.

In practice, the sequence of steps is a bit more involved than the three steps above. To understand why, let’s look at what this looks like on the command line:


Figure 4: Step 1 - Creating a snapshot of rpool/ROOT

- Step 1: First, we create a snapshot of the rpool/ROOT dataset. This snapshot captures the current state of our rpool/ROOT dataset on disk, and we will use it shortly when it comes time to encrypt this data. We will call the snapshot copy; the structure of our pool following the snapshot is illustrated in Figure 4. Note that, because we pass the -r (recursive) flag, the snapshot of the parent dataset ROOT also includes a snapshot of the child dataset pve-1.

In order to create the snapshot as indicated, the command to run is the following:

1

zfs snapshot -r rpool/ROOT@copy - Step 2: We cannot replace the

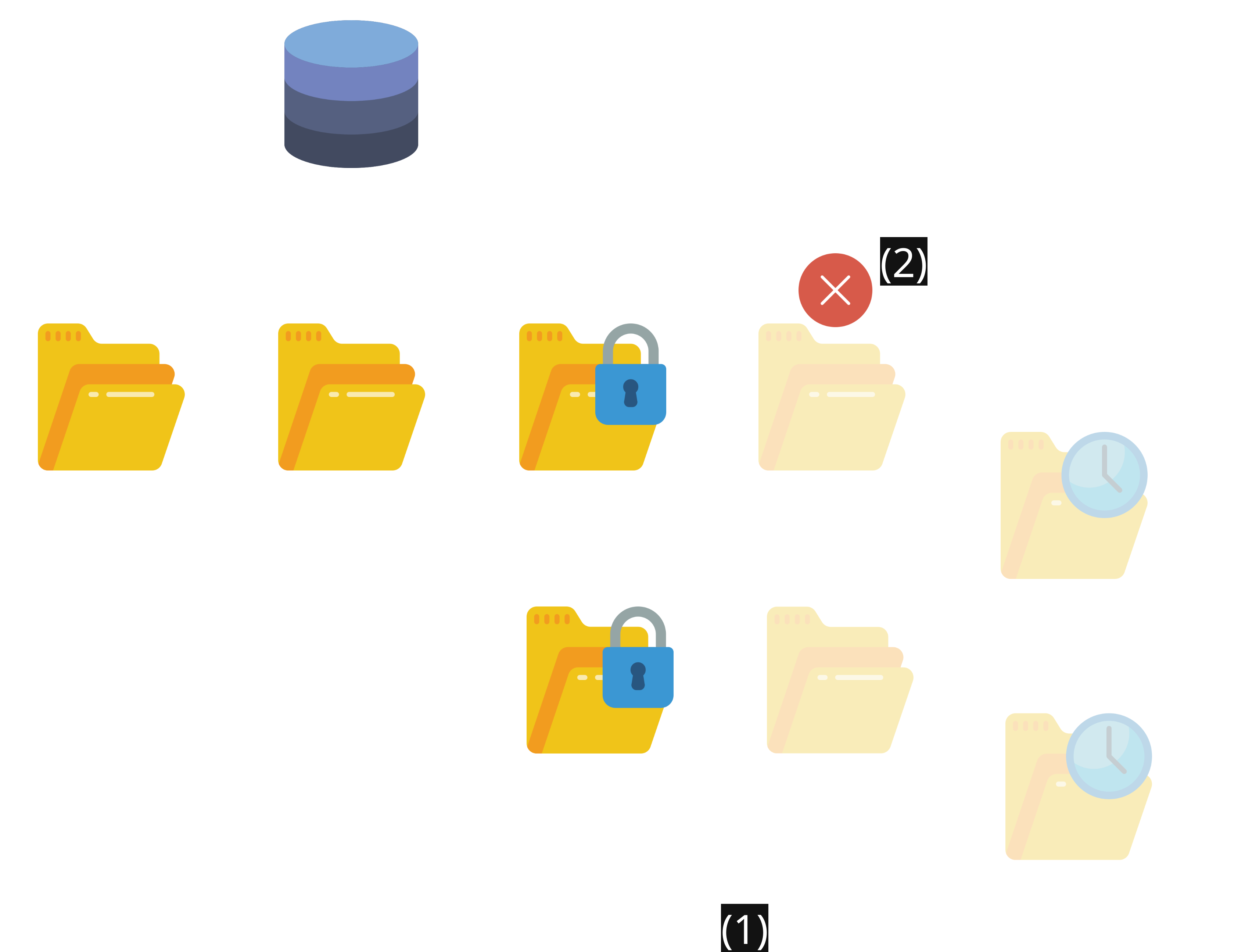

rpool/ROOTdataset in place with a new dataset which supports encryption (this is not possible to do in ZFS). For this reason, we will create a temporary root dataset, to which we will move our data. We will call this new datasetcopyroot, and will make use of ZFS’ssend/receivecommands to construct it using the snapshot created in Step 1. With our data safely copied to the new datasetcopyroot, we can go ahead and delete the originalROOTdataset with thezfs destroycommand. The structure of the ZFS pool following the creation of the newcopyrootdataset along with the deletion of the originalROOTdataset is shown in Figure 5. The commands to run are:1 2

zfs send -R rpool/ROOT@copy | zfs receive rpool/copyroot zfs destroy -r rpool/ROOT
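The zfs destroy -r rpool/ROOT command cannot be undone. Before running it, it may be worth confirming that the received copy contains every snapshot. This check is not part of the original procedure. It is a minimal sketch with a hypothetical function name. It assumes the initramfs provides a grep that supports -q, -x and -F, which BusyBox’s grep should.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every snapshot name given as an
# argument appears, as a whole line, in the list read from standard
# input. Expected input is the output of:
#   zfs list -H -o name -t snapshot -r rpool/copyroot
copy_is_complete() {
    listing=$(cat)                       # read the snapshot list once
    for snap in "$@"; do
        if ! printf '%s\n' "$listing" | grep -qxF -- "$snap"; then
            echo "missing snapshot: $snap" >&2
            return 1
        fi
    done
    return 0
}

# Usage in the initramfs shell, between the receive and the destroy:
#   zfs list -H -o name -t snapshot -r rpool/copyroot \
#     | copy_is_complete rpool/copyroot@copy rpool/copyroot/pve-1@copy \
#     && zfs destroy -r rpool/ROOT
```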


Figure 6: Step 3 - Creating the encrypted ROOT dataset

- Step 3: We can now create a new rpool/ROOT dataset, this time with encryption enabled. Before we do so, however, I will take the opportunity to set the autotrim property to on for rpool. This property instructs the pool to notify the underlying disks whenever space is freed, so that they can reclaim the corresponding blocks. Note that this is only supported on devices that implement this feature (e.g. SSDs). The command to enable the autotrim property is:

```
zpool set autotrim=on rpool
```

We can now create the new rpool/ROOT dataset with encryption enabled. For this we will use the zfs create command with the options -o encryption=on and -o keyformat=passphrase. The keyformat option can be set to passphrase, hex, or raw. With hex or raw, the key must be exactly 32 bytes long. It is given either as a human-readable hex string (64 hex characters) or as a raw byte string, respectively[7]. The value passphrase allows setting a passphrase as human-readable text, from which ZFS derives the actual key.
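If you prefer keyformat=hex, the key is the 32 bytes written out as 64 hexadecimal characters. One way to generate such a key is sketched below. It assumes od, tr and /dev/urandom are available, as on a standard Debian/Proxmox install. The function name and the key file path in the usage comment are hypothetical, and the rest of this article sticks with keyformat=passphrase.

```shell
#!/bin/sh
# Hypothetical helper: print a random 256-bit key as 64 lowercase hex
# characters, the format expected by keyformat=hex.
gen_hex_key() {
    # -An: no address column, -tx1: one hex pair per byte, -N32: 32 bytes.
    # tr removes od's separating spaces and line breaks.
    od -An -tx1 -N32 /dev/urandom | tr -d ' \n'
}

# Usage (hypothetical path):
#   gen_hex_key > /keys/example.hex && chmod 400 /keys/example.hex
```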

Figure 6 shows the newly created, encrypted ROOT dataset. Below is the command used to create it:

```
zfs create \
    -o encryption=on -o keyformat=passphrase \
    -o acltype=posix -o xattr=sa -o atime=off -o checksum=blake3 -o overlay=off \
    rpool/ROOT
```

The command above sets some additional properties on the newly created dataset, which I found to be generally recommended online. These are as follows:

- acltype=posix and xattr=sa: acltype tells ZFS whether Access Control Lists (ACLs), an additional mechanism for controlling permissions on a filesystem, are used. The value posix tells ZFS that ACLs are used and that they are of the POSIX variety (which ZFS only supports on Linux). The ZFS documentation recommends setting xattr to sa when using POSIX ACLs. With this setting, ZFS stores extended attributes (where POSIX ACLs are kept) directly in the file’s inode rather than in a separate hidden directory. This allows ZFS to return a file’s ACL with a single read (rather than at least two), but it is not supported by all operating systems[8]. If you are not going to make use of ACLs, you can ignore this option.
- atime=off: Disables tracking of files’ access times on the dataset, so that merely reading a file does not cause ZFS to write updated metadata. Our scenario does not require keeping track of files’ access times. Disabling them translates into better I/O performance, since a new access time no longer has to be written each time a file is simply read.
- checksum=blake3: As of this writing, the default checksumming algorithm used by ZFS is fletcher4. blake3 is a modern cryptographic hash algorithm which was introduced to OpenZFS in release 2.2. I am not entirely sure whether switching to it improves or degrades performance in any measurable way, but thought it wouldn’t hurt to try it.
- overlay=off: Setting the overlay property to off prevents ZFS from mounting a dataset on a non-empty directory. I do not fully understand this feature yet, but found a couple of mentions online recommending setting it to off in containerized environments.
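Because checksum=blake3 only exists from OpenZFS 2.2 onwards, it may be worth checking the installed version first. The sketch below assumes that the first line printed by zfs version looks like zfs-2.2.4-pve1, and that GNU sort is available for its -V option. Both helper names are hypothetical. The sketch only checks the userland tools. As far as I know, the pool must also have the blake3 feature enabled, which zpool get feature@blake3 rpool reports.

```shell
#!/bin/sh
# Hypothetical helper: extract the userland OpenZFS version (e.g. "2.2.4")
# from the first line of `zfs version` output read on standard input.
# Assumes that line has the form "zfs-<version>-<suffix>".
zfs_userland_version() {
    head -n 1 | sed -n 's/^zfs-\([0-9][0-9.]*\).*/\1/p'
}

# Hypothetical helper: succeed if version $1 >= version $2
# (relies on GNU sort's version ordering, -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on the host:
#   v=$(zfs version | zfs_userland_version)
#   version_ge "$v" 2.2 && echo "blake3 available" || echo "keep the default checksum"
```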

Once you execute the command above, you will be prompted for your passphrase. I couldn’t find any references explicitly stating whether ZFS supports UTF-8 or other Unicode encodings. To be safe, it’s probably a good idea to limit your passphrase to ASCII characters. Passphrases can be between 8 and 512 bytes long, so it is still possible to choose a sufficiently complex passphrase even with this limited character set.
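A small check along these lines could confirm that a candidate passphrase respects both constraints before you type it at the prompt. It is a sketch with a hypothetical function name. The 8 to 512 byte range is ZFS’s limit, while the printable-ASCII rule is only the precaution suggested above, not a documented ZFS requirement. Passing a real passphrase as a command-line argument may leave it in your shell history.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if the passphrase given as $1 is
# 8-512 bytes long and contains only printable ASCII (space to tilde).
check_passphrase() {
    nl='
'
    case "$1" in
        *"$nl"*) echo "passphrase must not contain newlines" >&2; return 1 ;;
    esac
    # Count bytes (not characters) and strip any padding wc may add.
    n=$(printf '%s' "$1" | wc -c | tr -d ' ')
    if [ "$n" -lt 8 ] || [ "$n" -gt 512 ]; then
        echo "passphrase must be 8-512 bytes long, got $n" >&2
        return 1
    fi
    # In the C locale, [^ -~] matches any byte outside printable ASCII.
    if printf '%s\n' "$1" | LC_ALL=C grep -q '[^ -~]'; then
        echo "passphrase contains non-ASCII or control characters" >&2
        return 1
    fi
    return 0
}
```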

Figure 7: Step 4 - Copying the data into the encrypted ROOT and deleting copyroot

- Step 4: With the new encrypted ROOT dataset created, we can copy the content of the temporary copyroot dataset into its new permanent destination. As in Step 2, we again use ZFS’s send/receive commands. Once the data is copied, we can delete the copyroot dataset. This is shown in Figure 7.

With copyroot deleted, we set the mountpoint of our new permanent root filesystem to /. The final step before rebooting the system is to export rpool. The commands to execute for this step are:

```
zfs send -R rpool/copyroot/pve-1@copy | zfs receive -o encryption=on rpool/ROOT/pve-1
zfs destroy -r rpool/copyroot
zfs set mountpoint=/ rpool/ROOT/pve-1
zpool export rpool
```

Note that when issuing the send/receive command, you might get the warning message cannot mount '/': directory is not empty. The received root dataset (e.g. rpool/copyroot/pve-1) has its mountpoint set to /, a property copied from the original rpool/ROOT/pve-1 dataset. At the time we issue the command, however, the root directory / is already populated by the initramfs root filesystem. A similar warning might be returned when setting the mountpoint of our newly populated rpool/ROOT/pve-1 dataset. ZFS might also complain with the message property may be set but unable to remount filesystem. Both warnings can be ignored.

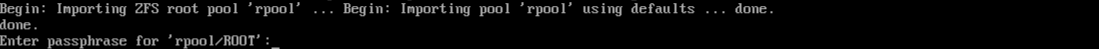

By this point, all is set for the new encrypted ROOT to be used by Proxmox for the next boot. To reboot the system from the initramfs’ shell, the command to run is reboot -f. On the next boot you should be prompted for the passphrase of the now encrypted ZFS root dataset, this is shown in Figure 8.

Figure 8: ZFS encryption passphrase prompt


Encrypting the remaining datasets

The steps we have gone through in the previous section have allowed us to encrypt the rpool/ROOT dataset. This leaves the two other datasets, rpool/data and rpool/var-lib-vz, to encrypt. The steps to achieve this are the same as those described in the previous section, with a few small differences.

If we were to encrypt the two datasets rpool/data and rpool/var-lib-vz with passphrases as well, we would have to type three passphrases (one per encrypted dataset) on each boot. This is obviously not ideal. Instead, we can store the passphrases for these two datasets in separate files and tell ZFS where to find them. We keep these files safe by storing them on the encrypted root filesystem. This way, they only become available to ZFS after we unlock the root filesystem at boot, which leaves us with a single passphrase to type manually.

As mentioned, the steps to encrypt the two remaining datasets are the same as those followed to encrypt the root dataset. If we stop any running VMs or containers, we can in fact perform these steps from within Proxmox (instead of the initramfs). The only differences are as follows:

- Before we start encrypting the two datasets, we create a directory /keys in which we will store our encryption passphrases. In this directory, we create two files, data.key and var-lib-vz.key, each storing the passphrase for the corresponding dataset. After generating random passphrases and saving them to these files, we make the files readable only by root, and immutable:

```
chmod 400 /keys/data.key /keys/var-lib-vz.key
chattr +i /keys/data.key /keys/var-lib-vz.key
```

- In Step 3, when it comes time to create the new encrypted datasets, we need to pass an additional keylocation argument to the zfs create command. Its value must specify the location of the key file. For example, for the rpool/data dataset the value should be file:///keys/data.key, and the zfs create command would look like:

```
zfs create \
    -o encryption=on -o keyformat=passphrase -o keylocation=file:///keys/data.key \
    -o acltype=posix -o xattr=sa -o atime=off -o checksum=blake3 -o overlay=off \
    rpool/data
```
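I don’t prescribe a particular way of generating the random passphrases. One possible sketch assumes head, base64 and /dev/urandom from a standard Debian/Proxmox install. 32 random bytes encode to a 44-character base64 string, which is plain ASCII and well within ZFS’s 8 to 512 byte passphrase range. The function name is hypothetical. chattr is left as a separate step because it needs root.

```shell
#!/bin/sh
# Hypothetical helper: create <dir>/<name>.key holding a random ASCII
# passphrase, readable only by its owner. Refuses to overwrite a key.
make_key_file() {
    dir=$1
    file="$dir/$2.key"
    if [ -e "$file" ]; then
        echo "$file already exists, not overwriting" >&2
        return 1
    fi
    mkdir -p "$dir" && chmod 700 "$dir" || return 1
    # 32 random bytes encode to 44 base64 characters; tr drops the
    # newline base64 appends. umask 077 keeps the file private while written.
    ( umask 077 && head -c 32 /dev/urandom | base64 | tr -d '\n' > "$file" ) || return 1
    chmod 400 "$file"
}

# Usage on the Proxmox host, as root (repeat for the second dataset):
#   make_key_file /keys data
#   chattr +i /keys/data.key
```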

A very important additional step, beyond those of the previous section, is to make sure ZFS loads the configured encryption keys before it attempts to mount the datasets. The user types in the passphrase for rpool/ROOT during boot, but nothing prompts for the passphrases of rpool/data and rpool/var-lib-vz. ZFS already knows where these keys are, through the keylocation argument used when creating the datasets. We simply need to tell it to load them before it starts mounting the corresponding datasets. We can achieve this with a systemd service, by creating the file /etc/systemd/system/zfs-load-key.service with the following content:

```
[Unit]
Description=Load encryption keys
DefaultDependencies=no
After=zfs-import.target
Before=zfs-mount.service

[Service]
Type=oneshot
RemainAfterExit=yes
ExecStart=/usr/sbin/zfs load-key -a

[Install]
WantedBy=zfs-mount.service
```

This service file makes sure the command zfs load-key -a runs before the zfs-mount service. The zfs-mount service ships with the ZFS utilities and handles mounting all available ZFS filesystems at boot. The zfs load-key command with the -a option loads the keys for all encrypted datasets in all pools imported by the system[9]. Once the service file with the content above is created, we can enable the service using the following command:

```
systemctl enable zfs-load-key
```

On subsequent boots, the newly created zfs-load-key service should now load the encryption passphrases for the rpool/data and rpool/var-lib-vz datasets. Once the system has booted, we can check that the keys were loaded correctly by checking the status of the service:

```
systemctl status zfs-load-key
● zfs-load-key.service - Load encryption keys
<...shortened for readability>
Jun 09 14:18:05 Proxmox systemd[1]: Starting zfs-load-key.service - Load encryption keys...
Jun 09 14:18:05 Proxmox zfs[719]: 2 / 2 key(s) successfully loaded
Jun 09 14:18:05 Proxmox systemd[1]: Finished zfs-load-key.service - Load encryption keys.
```
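Besides the service log, the keystatus property can be queried directly. It reads available once a dataset’s key is loaded, unavailable otherwise, and - for unencrypted datasets. Below is a minimal sketch of a hypothetical helper that lists datasets whose key is still missing. It is fed with zfs get in scripted mode.

```shell
#!/bin/sh
# Hypothetical helper: read "name<TAB>keystatus" lines on stdin, print
# every dataset whose key is not loaded, and return 1 if there is any.
check_keys_loaded() {
    awk -F '\t' '
        $2 == "unavailable" { print "key not loaded: " $1; missing = 1 }
        END { exit missing }
    '
}

# Usage on the host:
#   zfs get -H -o name,value -t filesystem,volume keystatus | check_keys_loaded
```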

Similarly, you can check whether the zfs-mount service was able to mount all datasets and finish successfully. In my case, I was surprised to find that it was not:

```
systemctl status zfs-mount
× zfs-mount.service - Mount ZFS filesystems
<...shortened for readability>
Jun 09 14:18:05 Proxmox systemd[1]: Starting zfs-mount.service - Mount ZFS filesystems...
Jun 09 14:18:05 Proxmox zfs[729]: cannot mount '/var/lib/vz': directory is not empty
Jun 09 14:18:05 Proxmox systemd[1]: zfs-mount.service: Main process exited, code=exited, status=1/FAILURE
Jun 09 14:18:05 Proxmox systemd[1]: zfs-mount.service: Failed with result 'exit-code'.
Jun 09 14:18:05 Proxmox systemd[1]: Failed to start zfs-mount.service - Mount ZFS filesystems.
```

Looking through the service log, it seems ZFS did not manage to mount the rpool/var-lib-vz dataset because its mountpoint /var/lib/vz was not empty. Listing this directory confirms that it is indeed not empty:

```
ls /var/lib/vz
dump  images  private  snippets  template
```

Investigating these directories individually revealed that they were empty. It seems that after I destroyed the plain rpool/var-lib-vz dataset, Proxmox noticed that its mountpoint /var/lib/vz had become empty. It then recreated the empty skeleton of directories usually stored there. This might be because I was using the Proxmox web interface while following the encryption steps, or it might be something Proxmox checks for regularly. Whatever the reason, fixing this issue is as easy as deleting the content of /var/lib/vz and telling ZFS to mount rpool/var-lib-vz again:

```
rm -rf /var/lib/vz/*
zfs mount rpool/var-lib-vz
```

Doing this also solves the issue on subsequent boots. We can now restart the zfs-mount service and check that, this time, it encounters no issues:

```
systemctl restart zfs-mount
systemctl status zfs-mount
● zfs-mount.service - Mount ZFS filesystems
<...shortened for readability>
Jun 09 14:39:01 Proxmox systemd[1]: Starting zfs-mount.service - Mount ZFS filesystems...
Jun 09 14:39:01 Proxmox systemd[1]: Finished zfs-mount.service - Mount ZFS filesystems.
```
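As an aside, rm -rf on a mountpoint leaves no room for mistakes. A more cautious variant of the /var/lib/vz cleanup only deletes empty directories and refuses to continue if anything else remains. This is a sketch with a hypothetical function name, and it assumes GNU find for -mindepth, -empty and -delete.

```shell
#!/bin/sh
# Hypothetical helper: remove empty sub-directories under the mountpoint
# given as $1, then succeed only if the mountpoint itself is now empty.
clear_empty_skeleton() {
    mp=$1
    [ -d "$mp" ] || { echo "$mp is not a directory" >&2; return 1; }
    # -delete implies depth-first traversal, so nested empty dirs go first.
    find "$mp" -mindepth 1 -type d -empty -delete
    if [ -n "$(ls -A "$mp")" ]; then
        echo "$mp still contains files; leaving it alone" >&2
        return 1
    fi
}

# Usage on the host:
#   clear_empty_skeleton /var/lib/vz && zfs mount rpool/var-lib-vz
```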

Setting up dropbear for easy unlock on boot

As our Proxmox system is currently set up, the passphrase of the root dataset must be typed in manually at each boot. If you are like me and would rather have your Proxmox machine tucked away and running headless, having to find a monitor and keyboard for each reboot is far from ideal. Thankfully, it is possible to type the passphrase remotely by making use of the Dropbear software package. Dropbear is a minimalist SSH server and client designed for environments with limited resources (e.g. embedded systems)[10]. We will use the dropbear-initramfs package, which integrates Dropbear into the Linux initramfs. This makes it possible to connect through SSH to the initramfs environment at the point where the root ZFS dataset needs to be unlocked and its passphrase typed in. To install dropbear-initramfs we use the command:

```
apt-get --assume-yes --no-install-recommends install dropbear-initramfs
```

The --no-install-recommends flag avoids installing the recommended cryptsetup package, which we have no use for since we are using ZFS native encryption[11]. After installing dropbear-initramfs, we configure it by adding the following line to the file /etc/dropbear/initramfs/dropbear.conf:

```
DROPBEAR_OPTIONS="-p 4748 -s -j -k"
```

The line above defines the following options for dropbear-initramfs:

- -p 4748: The TCP port used by the Dropbear SSH server. Choosing a port other than SSH’s default port 22 avoids host-key mismatch warnings from an SSH client that connects both to the dropbear-initramfs server and to the Proxmox server. Both share the same IP address but present different host keys.
- -s: Disable password logins.
- -j: Disable local port forwarding.
- -k: Disable remote port forwarding.
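dropbear.conf may already contain a DROPBEAR_OPTIONS line, possibly commented out. A small idempotent helper avoids ending up with duplicates. This is a sketch with a hypothetical function name, and it assumes GNU sed’s -i option. The options string must not contain | or &, because it is inserted into a sed replacement.

```shell
#!/bin/sh
# Hypothetical helper: set DROPBEAR_OPTIONS in the given config file by
# replacing an existing (possibly commented-out) assignment, or by
# appending one if none exists.
set_dropbear_options() {
    conf=$1
    line="DROPBEAR_OPTIONS=\"$2\""
    if grep -q '^#\{0,1\}DROPBEAR_OPTIONS=' "$conf" 2>/dev/null; then
        sed -i "s|^#\{0,1\}DROPBEAR_OPTIONS=.*|$line|" "$conf"
    else
        printf '%s\n' "$line" >> "$conf"
    fi
}

# Usage on the host:
#   set_dropbear_options /etc/dropbear/initramfs/dropbear.conf "-p 4748 -s -j -k"
```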

The next file to configure is /etc/dropbear/initramfs/authorized_keys. There we need to add the public key we will be using to connect to the dropbear SSH server. We can also define key-specific limitations in this file:

```
no-port-forwarding,no-agent-forwarding,no-x11-forwarding,command="/usr/bin/zfsunlock" <your ssh public key goes here>
```

The configuration above disables port-, agent- and X11-forwarding for the key we are authorizing. Furthermore, we also restrict the usage of this key, by forcing the execution of the command zfsunlock whenever this key is used to connect to the server, thus denying the user the possibility to do anything else when connecting to the dropbear-initramfs SSH server using this key.
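To avoid typos in the long option prefix, the authorized_keys line can be generated from the public key file of a dedicated key pair. This is a sketch with a hypothetical function name. It only formats the line and assumes the .pub file holds exactly one key. The usage comment also assumes your Dropbear version accepts ed25519 keys.

```shell
#!/bin/sh
# Hypothetical helper: print an authorized_keys entry that restricts the
# public key in file $1 to running zfsunlock, with port, agent and X11
# forwarding disabled.
unlock_key_entry() {
    pub=$1
    if [ "$(wc -l < "$pub" | tr -d ' ')" -ne 1 ]; then
        echo "expected exactly one key in $pub" >&2
        return 1
    fi
    printf 'no-port-forwarding,no-agent-forwarding,no-x11-forwarding,command="/usr/bin/zfsunlock" %s\n' "$(cat "$pub")"
}

# Usage: create a key pair on the client, copy the .pub file to the
# Proxmox host, then on the host:
#   unlock_key_entry pve_unlock.pub >> /etc/dropbear/initramfs/authorized_keys
```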

Once the dropbear-initramfs package is configured, we can regenerate the initramfs so that our modifications are integrated into it:

```
update-initramfs -u
```

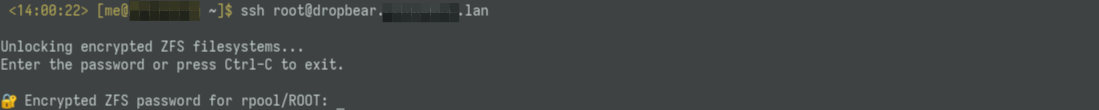

We can now reboot the system. During the boot process the initramfs is loaded and the Dropbear SSH server is started. The initramfs then attempts to mount the root ZFS dataset, prompts the user for a passphrase, and waits for input. This time, however, we can connect remotely to the dropbear-initramfs SSH server as root. This forces the execution of the zfsunlock command, which automatically prompts us for the root dataset’s passphrase, as shown in Figure 9.

Figure 9: zfsunlock prompt through the dropbear SSH session

Once the passphrase is input, the initramfs can mount the root filesystem, the dropbear-initramfs session is ended, and the boot process proceeds as before.

Conclusions

Through the steps detailed in this article, we took a Proxmox system from its default ZFS layout and turned it into a more secure system using ZFS’s built-in encryption feature. We also made use of dropbear-initramfs to make remote unlocking of the now-encrypted root ZFS dataset possible. Although this all sounds well and good, there are a couple of caveats to remain aware of when following these steps.

The first thing to keep in mind is that our method relied on creating an encrypted ZFS dataset, copying our data into it, and then “deleting” the original data from the disk. I am using quotes around the word “deleting” because the bits representing the data have simply been marked as free by ZFS, not securely overwritten. As such, a sufficiently motivated attacker with enough resources and digital forensics knowledge could probably retrieve the original data without too much trouble. For this reason, the article which inspired this one recommends performing the steps above right after a fresh installation of Proxmox[11]. That way, no sensitive data makes it to the disk before the encryption steps.

Additionally, ZFS’s native encryption operates at the dataset level, and as such is not completely opaque. If attackers get their hands on the disk, they will not be able to see the content of the encrypted datasets, but they will be able to see the ZFS pool and the datasets which make it up. This differs from full-disk encryption, where the drive’s content would look indistinguishable from high-entropy random data to an attacker who has gotten hold of it. Whether this is a risk to you at all depends on the nature of your data and your threat model. In my case, I believe it is an acceptable level of risk, considering the drawbacks of the alternatives discussed at the beginning of this article, and seeing as the datasets’ contents are encrypted.