My first computer build: Part 3 - Memory

So far in this series we have gone through the reasoning behind my choices for the CPU and motherboard, as well as the case and cooler. In today’s article, we will be focusing our attention on RAM, and the different aspects I had to take into consideration before buying a memory kit for my build.

Our discussion will start with the aspect that is easiest to understand, namely the size of the memory. From there, we will look into the concept of memory frequency, which will then lead us to a discussion about memory timings and latency. In order to gain a better understanding of overall memory performance, we will take a deeper look into memory channels, ranks, and topology. Finally, as in all previous installments, I will close this article by revealing the RAM model I ended up choosing for my build.

Size

The first thing I needed to settle on was the total size of RAM I wanted to go with. I have previously laid down the intended use-cases of my system (see the Motivation section all the way back in the first installment of this series). As a quick reminder, virtualization, compilation jobs and 3D rendering are some of the main use-cases for my system. Those three use-cases all make heavy use of memory so I knew I would need a relatively large kit.

My reasoning was the following: one of my intended uses of the system (in the context of virtualization) is to use PCI passthrough to game on a Windows virtual machine, while still being able to do other things on my host OS. In such a scenario, at least 16GB of RAM would be dedicated to the gaming virtual machine, as that is usually the recommended amount of RAM for a gaming setup [1].

For this reason 16GB was already out of the question. The minimum amount of RAM I could get away with was 32GB. Ultimately, I decided to go with 64GB of RAM to be on the safe (if not overkill) side. I want to be able to run as many virtual machines as I might need, without feeling the hit in available performance to my host system. The 64GB of RAM in combination with the 12 cores (24 threads) of my Ryzen 9 5900X CPU would allow me to achieve that.

Frequency

After settling on the amount of RAM I needed, the next task was to figure out what frequency, or frequency range, I should be aiming for. The first thing I quickly learned was that the frequencies advertised by vendors on their memory modules do not reflect the real operating frequencies of said modules. This is because current memory is built upon DDR SDRAM technology, which stands for Double Data Rate Synchronous Dynamic Random-Access Memory. Let’s dissect that acronym:

- Random-Access: Refers to the fact that the memory can be read and written in any order, and that these operations take essentially the same amount of time regardless of the location accessed. This is in contrast to other forms of data storage for which the read/write time depends on the location at which these operations are conducted (e.g. hard drives).

- Dynamic: Meaning memory cells require constant refresh cycles in order to maintain their content. This requirement stems from the fact that the main building block of Dynamic Random-Access Memory consists of one transistor and one capacitor. The capacitor is discharged at each access, thus requiring a recharge in order to maintain the value of the bit it is holding [2]. This contrasts with Static memory, which is built upon a more complicated flip-flop circuit (made out of six transistors) and does not require a refresh after each access [3].

- Synchronous: Meaning that memory operations occur following a clock signal.

- Double Data Rate: This means that memory operations occur twice per clock cycle, on both the rising and falling edges of the clock signal. This contrasts with Single Data Rate memory, where such operations occur only once per cycle, either always on the rising or always on the falling edge. Figure 1 [4] illustrates this difference.

Figure 1: SDR vs DDR signals per clock cycle

The part of this acronym most relevant to the difference between advertised and operational RAM frequency is DDR. The operational frequency of DDR RAM is that of the clock it is using. However, since both the rising and falling edges of the clock signal are used, the apparent transfer rate equals double the clock frequency. Vendors advertise this last value as the effective memory frequency in Hz (or MHz), even though it reflects the number of transfers carried out per second and, as such, should be given in T/s (or MT/s). This value does not reflect the actual clock speed of the memory module. In order to avoid confusion, from this point on, I will refer to the memory’s operational frequency (in MHz) as the memory clock frequency, and will adopt the industry’s (technically inaccurate) use of MHz to refer to the memory’s transfer rate (instead of the more accurate MT/s). I hope the importance of this distinction will become clearer before the end of this section.

Now, with our improved understanding of RAM frequency, let us get back to the original question of figuring out what frequency to aim for when shopping for RAM. As a reminder, my build is centered around a Ryzen 9 5900X CPU. The main aspect which determines the optimal memory frequency for this processor is the topology used in its Zen3 micro-architecture. Figure 2 [5] shows such a topology for a multi-CCD processor (such as my Ryzen 9 5900X). What is most relevant to us is the path which data needs to take when moving between the CCXs (the Core Complexes, i.e. the CPU cores) and the DRAM modules. This path crosses three main components, namely:

- The Infinity Fabric (IF): Leaving the core complex, data is first transferred to the Infinity Fabric, which is the communication interconnect architecture used on the Zen micro-architecture [6]. Its role is to allow communications between the different chiplets on a Zen-based processor.

- The Unified Memory Controller (UMC): On its way out of the processor, data is communicated to the I/O chiplet die (cIOD). In particular, data destined for the memory is transferred to the UMC, which is the CPU’s main interface to memory.

- The DRAM modules: Finally, data destined to the memory needs to be transferred to the DRAM modules themselves.

Figure 2: Zen3 topology for a two-CCD processor

Each of these three components has its own clock signal, and as such exists in a separate clock domain [7]. However, for optimal operation all three need to run at the same frequency; this is referred to as 1:1 mode (sometimes also as 1:1:1 mode). Default motherboard BIOS settings make it so that these three components are automatically synced to run at the same frequency. However, this mode is maintainable only up to memory transfer rates of 3600MHz [8][9] (i.e. an actual memory clock of 1800MHz). Past this point, the Infinity Fabric’s clock is decoupled from the memory clock and remains set at 1800MHz. Additionally, the Unified Memory Controller’s frequency drops to half that of the DRAM modules [9][10]. This is referred to as 1:2 mode (confusingly, it is also sometimes referred to as 2:1 mode). Because the three components run at different clock frequencies in this mode, a synchronization between their different frequency domains is required whenever data is transferred from one domain to the other. This synchronization incurs a penalty in the raw memory latency.
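
To make the two modes concrete, here is a minimal Python sketch of the clock relationships described above. It assumes the guaranteed 3600MHz limit (individual CPUs may hold 1:1 mode beyond it), and the function name and the `sync_limit` parameter are purely illustrative, not part of any AMD tool.

```python
def zen3_clocks(transfer_rate, sync_limit=3600):
    """Return (memory, Infinity Fabric, memory controller) clocks in MHz.

    transfer_rate is the advertised rate of the kit (e.g. 3600).
    sync_limit is an assumption: the highest rate at which 1:1 mode holds.
    """
    memory_clock = transfer_rate / 2  # DDR: two transfers per clock cycle
    if transfer_rate <= sync_limit:
        # 1:1 mode: all three clock domains run in sync
        return memory_clock, memory_clock, memory_clock
    # 1:2 mode: the fabric stays at the limit, the controller runs at
    # half the memory clock
    fabric_clock = sync_limit / 2
    controller_clock = memory_clock / 2
    return memory_clock, fabric_clock, controller_clock


for rate in (3200, 3600, 4000, 4400):
    print(rate, zen3_clocks(rate))
```

For a 4000MHz kit, this gives a 2000MHz memory clock, an 1800MHz Infinity Fabric clock, and a 1000MHz memory controller clock, which is the mismatch responsible for the synchronization penalty.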

Figure 3 [5] showcases the effect of this frequency decoupling on memory latency. The first thing to notice is that, contrary to what I mentioned in the last paragraph, the frequency at which the transition between the two modes occurs is not exactly 3600MHz. The 3600MHz value is given by AMD as a guaranteed value up to which 1:1 mode will always be maintained on all their Zen3 CPUs. However, due to the silicon lottery, some units might be able to maintain this mode at even higher frequencies (e.g. a Ryzen 9 5900X maintaining a 1:1 ratio with a 4000MHz kit). AMD simply cannot guarantee such behavior on all units.

Going back to Figure 3, the X-axis of the graph includes latency values in addition to frequency values (these values are preceded by the letter C). We will ignore the effect of latency for now, leaving that discussion for the next section. Progressing from the left to the right of the graph, we notice that as the frequency rises, the raw memory latency keeps dropping, thus improving the overall memory performance. This is because the memory system (i.e. the Infinity Fabric + the memory controller) is able to match the RAM’s frequency, so faster memory results in a faster system and lower latency. This continues up until 1:1 mode is no longer maintainable by the memory system.

Using memory with a frequency higher than this value pushes the memory system into 1:2 mode. As mentioned before, in this mode, the Infinity Fabric decouples its frequency from that of the memory, limiting it to 1800MHz, and the memory controller runs at half the memory frequency. This frequency decoupling results in an overall increase in memory latency, i.e. a drop in performance. Depending on the load, this can cause an overall memory latency increase of up to 15% [9]. Progressing further to the right of this decoupling point, using even faster memory results in a gradual decrease in memory latency. However, it takes memory frequencies in the 4400MHz range before we start seeing latencies as good as those experienced with slower memory running in 1:1 mode.

Figure 3: Effect of memory frequency on raw latency for Ryzen 5 processors

So, what is the takeaway from all of this? What frequency should I be aiming for when shopping for memory? The answer should be clear by now: anything at or below the 3600MHz limit should allow me to keep my memory system in the more efficient 1:1 mode. Because I want the most performance out of my system, I should actually be aiming at 3600MHz, since that is the highest frequency at which 1:1 mode is guaranteed to be maintained. As a matter of fact, this is the AMD-recommended memory frequency for the Zen3 family of processors [5]. To give myself some margin on price, I will aim for memory kits with rated frequencies in the range of 3200MHz to 3600MHz.

With that being said, and as we slightly touched upon when analyzing Figure 3, frequency only partly answers the question of memory performance. In order to see the full picture, we need to learn about latency.

Latency and timings

Memory frequency reflects the number of operations per second that can be sent to and from memory. As DDR memory is synchronous, these operations are paced by the memory clock. However, a memory operation, e.g. accessing a memory column, takes multiple clock cycles to complete. As such, in order to calculate the actual memory latency, meaning the time in seconds (or ns) it takes to read data from memory or write data to it, we need to be aware of both its frequency and its timings.

Memory timings are values which give the number of clock cycles it takes the memory module to complete a given set of basic memory operations. As such, these timings are given in units of clock cycles. Knowing both a module’s frequency and its timings allows us to calculate its latency using the formula:

Timing (in Clock cycles) / Clock frequency (in Hz) = Latency (in s).

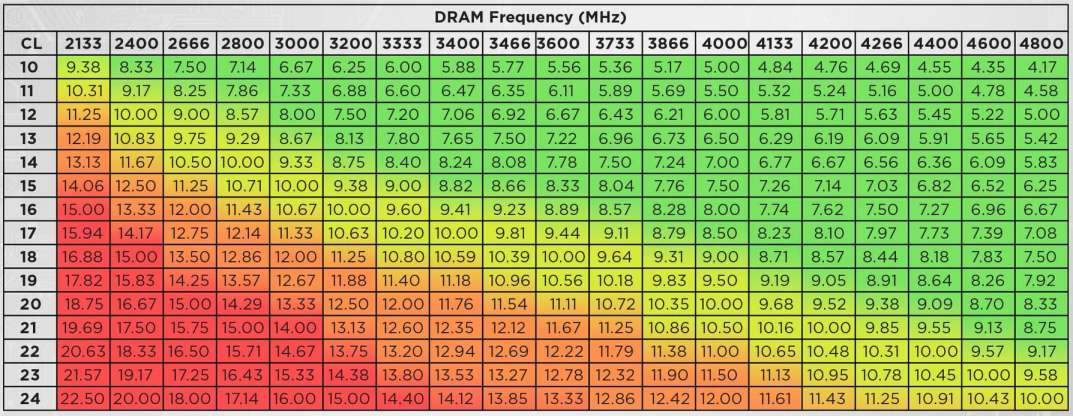

Memory vendors include four timings in the specification sheet (and usually on the packaging) of their RAM modules. These timings reflect the duration of four different memory operations [11]. I will not cover all four timings, as I don’t think knowing which exact operation each timing refers to is pertinent knowledge. However, I will cover the first of these timings, namely the CAS latency (or CL). This timing reflects the exact number of cycles between the moment the address of a memory column is sent to memory and the moment the memory replies with the data of that column. Out of the four memory timings, this is probably the most relevant. In fact, going back to Figure 3, the latency given on the X-axis (attached to each frequency after the C) is exactly the CAS latency. Combining the CAS latency with the memory frequency, and using the formula from above, one can calculate the first word latency, i.e. the time in ns it takes the memory to deliver the first bit of data after it has been asked for it. Figure 4 [12] lists the calculated first word latency for a wide range of frequencies and CAS latency values. Do note, however, that in order to obtain the values in Figure 4, the clock frequency to use in the formula from above is half the advertised memory frequency (see our discussion in the frequency section if you are unsure why).

Figure 4: First word latency (ns) as a function of frequency (MHz) and CAS latency (clock cycles)
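
As a quick sanity check of the latency formula, the following Python sketch computes the first word latency from an advertised frequency and a CAS latency. The function name is purely illustrative; the only assumption is the one stated in the text, namely that the real clock frequency is half the advertised value.

```python
def first_word_latency_ns(advertised_mhz, cas_latency):
    """First word latency in ns for a DDR kit.

    advertised_mhz is the vendor-advertised rate (e.g. 3600),
    cas_latency is the CL timing in clock cycles.
    """
    clock_mhz = advertised_mhz / 2         # real memory clock
    latency_us = cas_latency / clock_mhz   # cycles / MHz gives microseconds
    return latency_us * 1000               # convert to nanoseconds


for mhz, cl in ((3200, 16), (3600, 16), (3200, 14)):
    print(f"{mhz}MHz CL{cl}: {first_word_latency_ns(mhz, cl):.2f} ns")
# 3200MHz CL16: 10.00 ns
# 3600MHz CL16: 8.89 ns
# 3200MHz CL14: 8.75 ns
```

Note how a 3200MHz CL14 kit ends up with a slightly lower first word latency than a 3600MHz CL16 kit: frequency and timings have to be read together.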

Although not a foolproof indicator, first word latency is a good and simple indicator of overall memory latency. The effect of CAS latency on performance is pretty straightforward: at the same frequency, the lower the CAS latency, the better the overall performance. The same holds for the other three timings: at the same frequency, the lower the timing value, the better. Of course, the higher the rated frequency and the lower the timings of a memory kit are, the more expensive it will be. In general, 3600MHz memory kits with CL16 latency are almost always recommended as the sweet spot in terms of performance per price for Ryzen 5000 systems. We had arrived at the 3600MHz value for memory frequency following our discussion in the previous section. Now we know to also look for CAS latency values around 16.

RAM configuration

With the target size, frequency, and latency of the RAM settled, there was still the question of how all that RAM was to be configured. My motherboard has four RAM slots, meaning my intended 64GB of RAM could be mounted as either 4 sticks of 16GB or 2 sticks of 32GB each (most single-stick 64GB modules are reserved for server use). The two configurations are not equal, and would result in different levels of performance. To understand why this is, I need to introduce the following aspects of memory and motherboard design.

Memory channels

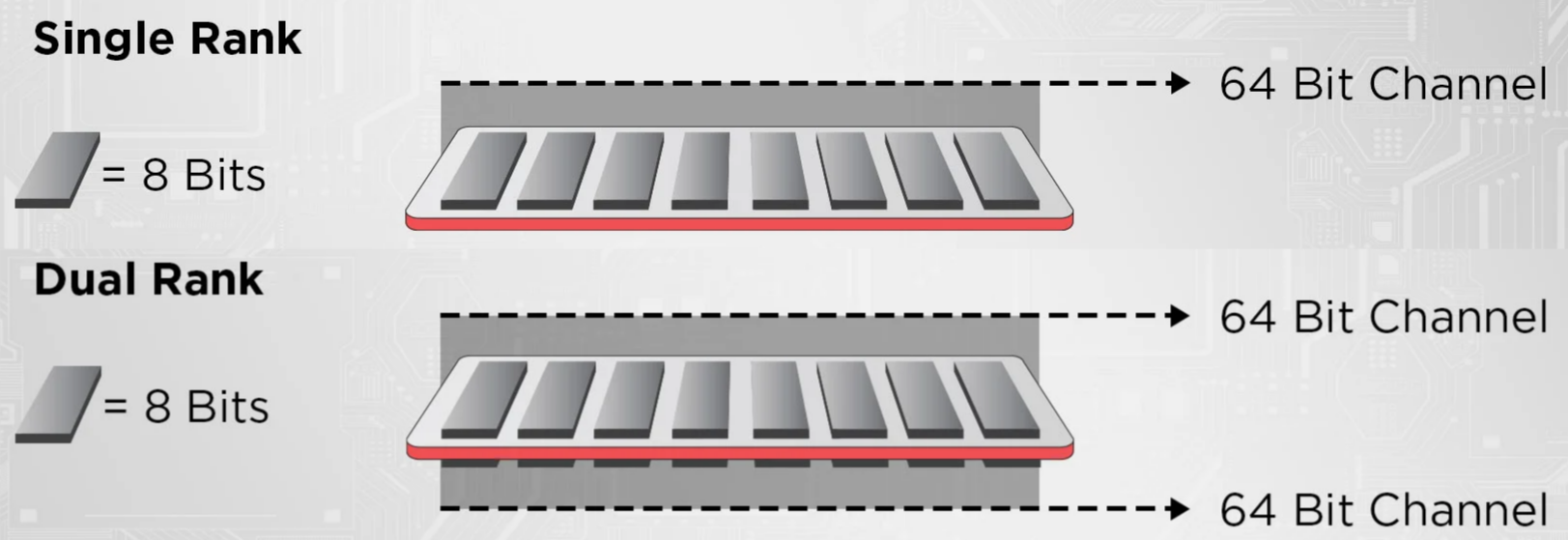

In almost all recent consumer motherboards, the communication bus connecting the CPU to the memory modules is configured in dual channel. This means that this bus is effectively composed of two independent channels. In comparison with previous single-channel configurations, dual channel effectively doubles the theoretical maximum memory bandwidth (see Figure 5 [13]). As current PC architectures use a 64-bit-wide channel, dual channel allows a total width of 2 x 64 bits = 128 bits between memory and CPU.
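
The effect of the channel count on the theoretical maximum bandwidth can be sketched as follows. This is only a back-of-the-envelope calculation (transfers per second times bytes per transfer times number of channels); it ignores timings, refresh cycles, and controller overhead, and the function name is purely illustrative.

```python
def peak_bandwidth_gb_per_s(advertised_mhz, channels=2, channel_width_bits=64):
    """Theoretical peak memory bandwidth in GB/s.

    advertised_mhz is the vendor-advertised rate, i.e. millions of
    transfers per second on each channel.
    """
    bytes_per_transfer = channel_width_bits / 8
    transfers_per_second = advertised_mhz * 1e6
    return transfers_per_second * bytes_per_transfer * channels / 1e9


print(f"{peak_bandwidth_gb_per_s(3200, channels=1):.1f} GB/s")  # 25.6 GB/s
print(f"{peak_bandwidth_gb_per_s(3200, channels=2):.1f} GB/s")  # 51.2 GB/s
print(f"{peak_bandwidth_gb_per_s(3600, channels=2):.1f} GB/s")  # 57.6 GB/s
```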

Figure 5: Single vs. dual channel memory

Dual-channel memory access is a property of the platform on which the memory is installed, and not of the memory itself (meaning there are no dual- or single-channel memory modules). Practically, this means that dual-channel access needs to be supported by both the CPU and the motherboard for it to be available. In my case, the Ryzen 9 5900X I am using in my build is part of the Ryzen 5000 line of processors, which supports dual-channel memory access [14]. Of course, in order to make use of dual-channel support, both channels available on the motherboard need to be populated. This means that using a single memory stick on a motherboard which supports dual channel will effectively result in single-channel performance.

Higher multi-channel platforms do exist: quad-, hexa-, and octa-channel platforms respectively offer four, six, and eight independent channels for memory-CPU communication. However, quad-channel platforms are mostly reserved for servers and HEDT platforms (e.g. AMD’s Threadripper), whereas hexa- and octa-channel support is exclusively reserved for the server market segment [15].

Consumer motherboards include either two or four memory slots. For motherboards with two slots, each slot is directly connected to its own channel. On motherboards with four memory slots, on the other hand, each channel is connected to two memory slots. In this second scenario, when all four slots are populated, each pair of modules shares one channel, thus effectively splitting its bandwidth. This could be seen as a first disadvantage of using four memory sticks vs. two, and in some regards, it is. However, this would be an incomplete conclusion, as there is one other factor which controls the effective memory bandwidth perceived by every module, namely the memory rank.

Memory rank

A memory module (or stick) is a simple PCB which hosts DRAM chips. These memory chips are the building blocks of RAM and are characterized by their width (in bits) and their size/density (in Gbits). Current consumer market DRAM chips can have a width of 4, 8, or 16 bits, and sizes ranging from 1Gbit all the way to 16Gbits [16]. The width of a DRAM chip is usually expressed using the form xN, where N is the width in bits, e.g. a 1Gbit x4 chip is a DRAM chip with a capacity of 1Gbit and a width of 4 bits.

A memory module is built using memory chips of the same size and width. Originally, these were mounted only “in series”, so that their widths would add up to the width of the memory bus. As the width of the memory bus is 64 bits, this effectively puts a limit on the number of chips one can use on a memory module, which in turn limits the maximum capacity of the module. As an example, considering a module which uses 1Gbit x8 chips, the maximum number of chips which can be mounted in series is 64 bits / (8 bits per chip) = 8 chips. If x4 chips are used instead, the number of chips on the module becomes 64 bits / (4 bits per chip) = 16 chips (see Figure 6 [17]). In these scenarios, the maximum module capacity is 8 x 1Gbit = 1GByte (if the x8 chips are used) and 16 x 1Gbit = 2GBytes (for the x4 chips).
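
The arithmetic above can be captured in a few lines of Python. This is a minimal sketch which assumes a plain 64-bit (non-ECC) memory bus and chips mounted only in series, as in the example; the function names are purely illustrative.

```python
BUS_WIDTH_BITS = 64  # assumption: non-ECC module on a 64-bit memory bus


def chips_in_series(chip_width_bits):
    """Number of chips whose widths add up to the bus width."""
    return BUS_WIDTH_BITS // chip_width_bits


def module_capacity_gbytes(chip_density_gbit, chip_width_bits):
    """Capacity of a module whose chips are all mounted in series."""
    total_gbit = chips_in_series(chip_width_bits) * chip_density_gbit
    return total_gbit / 8  # 8 bits per byte


print(chips_in_series(8), module_capacity_gbytes(1, 8))  # 8 1.0
print(chips_in_series(4), module_capacity_gbytes(1, 4))  # 16 2.0
```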

Figure 6: Comparison of memory modules using 8 x8 (top) vs. 16 x4 (bottom) DRAM chips

Memory modules with a capacity many times greater than the maximums calculated in the previous paragraph do exist, so how are those built? Well, we should note that in the calculations from the previous example, we used 1Gbit DRAM chips as building blocks. Nowadays, 4Gbit and 8Gbit chips are more commonly used, with 16Gbit chips slowly being adopted by more manufacturers. Using higher-density chips would definitely allow for higher-capacity modules. However, such chips are usually more expensive, and this approach would also soon hit the same maximum capacity wall faced with lower-density chips (this time at 32GBytes per module if 16Gbit x4 chips are used). The solution memory manufacturers came up with in order to further improve the maximum capacity of memory modules is multi-rank memory design.

A memory rank refers to all DRAM chips on a memory module that can be accessed simultaneously on the same memory bus [18]. In a single-rank memory module, all DRAM chips are organized in the same single rank, meaning they are all mounted in series at the end of the memory bus and are all accessed simultaneously. This is the scenario we have been considering in our conversation so far. In dual-, quad- or octal-rank memory, the chips on the memory module are organized into two, four, or eight ranks respectively, this time mounted in parallel (see Figure 7 [17]). Because they are mounted in parallel, only one rank on a multi-rank memory module can be accessed at any given time. Within each rank, the DRAM chips are still mounted in series and connected to the end of the memory bus. This effectively multiplies the maximum capacity of the memory module by its number of ranks.

Figure 7: Single rank (top) vs. dual rank (bottom) layouts

As we have just mentioned, although multi-rank memory allows for more capacity, this capacity is divided across the different ranks, with only one rank accessible at any given time. The question you should be asking yourself now is: wouldn’t this limited access effectively negate the gain in capacity in such a design? In order to correctly answer that question, we need to understand what rank interleaving is.

Rank interleaving refers to alternating the two main operations upon which all DRAM transactions are based, namely, refreshing and access. Refreshing is the act of writing a value into memory. This can refer to the writing of a new value that needs to be stored where a previous value not needed anymore is saved. Refreshing can also refer to the re-writing of the same value in place, in order to preserve it through the inherent volatility of SDRAM memory. Accessing the memory, simply refers to reading a value stored in it. During one memory clock-cycle, only one of these two operations can take place. Rank interleaving uses the fact that each rank on the memory module can be accessed independently of the other ranks, and schedules memory operations on these ranks so as to always alternate their refresh and access cycles. This way, when one rank is busy performing its refresh cycle, the other rank(s) are within their access cycle, and can thus be accessed. This results in effectively masking refresh cycles and making sure the memory channel is always kept busy.

Rank interleaving allows for a more effective memory bandwidth usage since it makes better use of the available memory channel. However, the scheduling required from the memory controller in order to alternate refresh and access calls to the different ranks introduces a new overhead which affects the final memory performance.

One final thing to add concerning memory rank is that the rank of a memory module is usually communicated by vendors using the notation NR, where N is the number of ranks (e.g. a 16GB 2Rx4 memory module has a total capacity of 16GB organized into two ranks of 4-bit-wide DRAM chips). Figure 8 [17] shows two memory labels displaying rank information using this notation. The labels in Figure 8 also include information about the width of the DRAM chips used, using the xN notation we mentioned earlier.
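
To illustrate how much information this notation carries, here is a small Python sketch which decodes a label of the simple form used in the example above ("16GB 2Rx4") and derives the chip count and chip density from it. Real labels carry more fields than this; the parser, its name, and the 64-bit non-ECC bus it assumes are all illustrative.

```python
import re

BUS_WIDTH_BITS = 64  # assumption: non-ECC module on a 64-bit memory bus


def decode_module_label(label):
    """Decode a label such as '16GB 2Rx4' into its physical layout."""
    match = re.fullmatch(r"(\d+)GB\s+(\d+)Rx(\d+)", label.strip())
    if match is None:
        raise ValueError(f"unsupported label format: {label!r}")
    capacity_gb, ranks, chip_width = (int(g) for g in match.groups())
    chips_per_rank = BUS_WIDTH_BITS // chip_width
    total_chips = ranks * chips_per_rank
    chip_density_gbit = capacity_gb * 8 / total_chips
    return {
        "capacity_gb": capacity_gb,
        "ranks": ranks,
        "chip_width_bits": chip_width,
        "total_chips": total_chips,
        "chip_density_gbit": chip_density_gbit,
    }


print(decode_module_label("16GB 2Rx4"))
```

Under these assumptions, the 16GB 2Rx4 module from the example works out to 32 chips (two ranks of 16) with a density of 4Gbit each.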

Figure 8: Examples of memory rank labeling

Memory topology

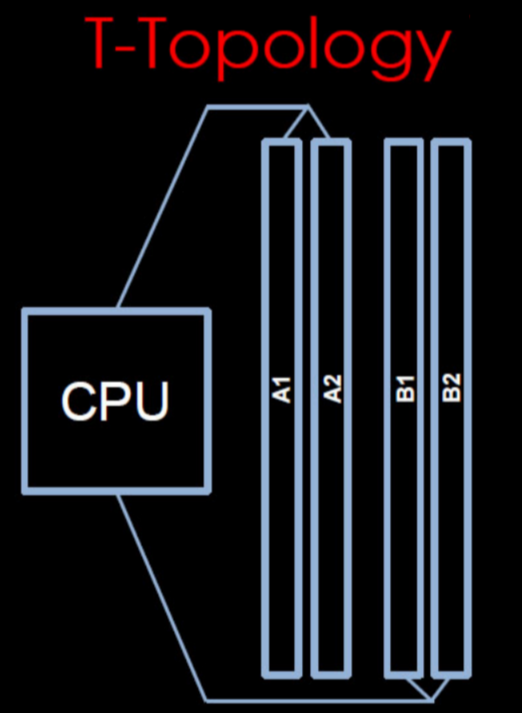

The final aspect of memory layout which affects the final RAM performance is the topology of the memory slot connections on the motherboard. This aspect is relevant for all dual-channel motherboards with four memory slots. As covered earlier, in this scenario, each pair of slots needs to be electrically connected to one of the two channels on the memory bus. This can be achieved in one of two ways:

- T-topology: In this case, the electrical traces making up each channel split midway to the memory slots. This results in the two slots connected to the same channel being equidistant from the CPU (see Figure 9 [10]). This has the advantage of electrical signals sent from the CPU taking the exact same time to reach the two modules connected to the two slots, thus making the channel more stable by eliminating (or at least lowering) clock skew [19] between the two modules.

This topology clearly favors four memory sticks being connected. In the case where only two sticks are connected (one on every channel), the open-ended wire-endings on the empty branch of the topology will cause signal reflections to occur on the channel traces, thus deteriorating the signal quality on the populated branch. This results in a drop in the maximum frequency achievable on the channel, thus limiting the maximum attainable memory bandwidth.

- Daisy Chain topology: As the name indicates, in contrast to the T-topology, here the two memory slots are chained one after the other on the channel traces. This can be seen in Figure 10 [10]. In this scenario, the two slots do not behave equally, unlike in the T-topology. The first thing to notice is that the two slots are not equally distant from the CPU. Furthermore, when populating the slots of a given channel, the first slot to be used should be the one at the end of the chain (in the case of Figure 10, that corresponds to slots A2 and B2). If the slot at the mid-point of the chain is populated first (slot A1 or B1 in Figure 10), its signal will suffer from the reflections caused by the open-ended traces leading to the unpopulated slot.

Daisy chain topology works best with only two slots being populated (those at the end of the chain). If all four slots are populated, the modules connected at the mid-point of the chain would still suffer from lower quality signals (though not as low as in the scenario where the other slots are not populated). This results in a decrease in the maximum frequencies that can be reached on daisy chain motherboards using four memory modules.

Finally, as I understand it, Daisy Chain is the topology most used for consumer motherboards. This is both because it is simpler, and thus cheaper to implement, and because the two-stick configuration is the most common in this market segment [10]. T-topology seems to be reserved for a few high-performance motherboard models.

Performance discussion

Having endured the long discussion above about memory channels, ranks and topology, we now can attempt an educated discussion about the performance differences one would expect when using different memory configurations on different platforms and under different loads.

Let us start with memory-channel considerations. As we mentioned above, practically all current consumer platforms implement dual-channel memory access, so we will not discuss any other multi-channel setups. A dual-channel configuration doubles the available bandwidth between memory and CPU. However, whether that bandwidth is made use of depends on the type of CPU load. Surprisingly (to me at least), it seems like gaming and normal office-use loads don’t particularly benefit from dual-channel memory access, with improvements of only a few percent being observed in some tests [20]. In contrast, CPU- and memory-heavy applications (such as simulation) can see double-digit improvements when switching from single to dual channel [20]. A similar trend is to be expected for multi-threaded applications (such as virtualization).

As we have also mentioned earlier, on dual-channel platforms, using either two or four slots would not result in the same performance. When we first mentioned this, our initial assumption was that four modules would perform worse than two when mounted on a two-channel platform. We can now complement that conclusion with what we have learned about memory ranks and layout.

We will limit our conversation to single- and dual-rank memory, as quad-rank and higher memory modules are reserved to the HEDT and server segment. If we consider the four modules scenario, one can use either one of two configurations:

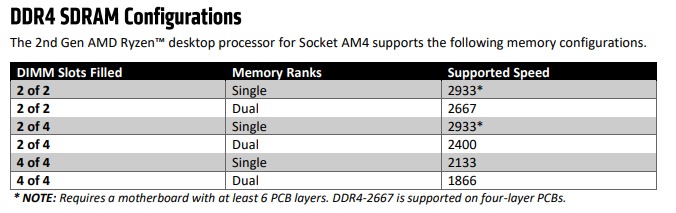

- Four dual-rank modules: This configuration would probably be used when a large amount of memory is needed on the system. As we mentioned earlier, dual-rank modules allow for a higher capacity, so a kit of four dual-rank modules allows for the maximum capacity. However, the main drawback of such a configuration is the limited maximum reachable frequency. Using four modules will always lower the supported frequency in comparison to using just two modules. This is because it is simply more complicated to sync four PCBs to the same frequency than it is to sync only two. On top of that, we have mentioned previously that interleaving introduces more overhead on the memory controller. This overhead is worsened when two dual-rank modules are present on each channel.

- Four single-rank modules: Interestingly enough, four single-rank modules behave much like two dual-rank modules. In this scenario, the CPU will still perform rank interleaving, the only difference being that the ranks being interleaved reside on different modules (on the same channel). This means that the two modules sharing one channel alternate their access and refresh cycles so as to make the most use of the memory channel, instead of having to “split” its bandwidth. As such, one would expect similar performance between four-slot single-rank and two-slot dual-rank kits. The only difference is that, in contrast to two-slot dual-rank kits, a four-slot single-rank kit requires the frequency to be synced across four PCBs instead of just two. This results in a lower maximum reachable frequency on such kits.

Figure 11 [21] shows the supported memory speeds for 2nd generation AMD Ryzen desktop processors using different ranks and slot configurations (available and used). The figure applies to Gen2 (Zen+) Ryzen processors, so it is a bit outdated. I couldn’t find any more recent information, and I would hope the information it contains is still somewhat true for more recent Zen generations. As can be seen in Figure 11, the highest frequencies can be reached when using two modules, with single-rank two-module kits allowing for the highest frequencies. Conversely, the worst supported speeds are to be expected for dual-rank four-module kits. In this scenario, the memory controller suffers from the increased scheduling overhead, and the four sticks make syncing at higher frequencies harder.

Figure 11: Supported frequencies on 2nd Gen Ryzen processors for different memory configurations and ranks

Finally, in the previous section, we also discussed the effect of memory-channel topology on the final memory performance. Here, clearer conclusions can be drawn: mainly, Daisy Chain heavily favors two-module configurations, whereas T-topology works best with four modules. My motherboard (the MSI MEG X570 Unify) uses a daisy chain topology, which weighs heavily in favor of a two-module kit. Combining this information with the other conclusions we have drawn so far in this section, the obvious choice for my build is a two-module, dual-rank kit.

Final remarks: QVLs and physical clearance

A couple of final remarks deserve mentioning before concluding.

A very helpful resource I found during my research was QVLs, or Qualified Vendor Lists. Such lists are compiled and published by motherboard manufacturers, and contain all memory kits that were tested to be fully functional with a specific motherboard model. These lists are a great resource to make sure that any memory kit you are interested in has been previously tested, and is guaranteed to work out of the box with the motherboard model you own. In my case, I was able to find such a QVL for my MSI MEG X570 Unify motherboard.

Finally, in the previous installment of this series, I ended up choosing the Be quiet! Dark Rock Pro 4 CPU cooler. The massive size of the Dark Rock Pro 4 imposes a maximum height on my RAM modules of 40mm (46.8mm if no front fan is used) [22]. As I want to make full use of my CPU cooler, this means I cannot use any module with a total height greater than the 40mm clearance available.

Final choice

It took a lot of words to get to this point. However, we now know exactly the specifications of the perfect memory kit for my build. To summarize the target specs are: 64GB 2-stick kit of Dual Rank memory at a frequency of 3200MHz to 3600MHz with a latency of 14 to 16, and a height no more than 40mm.

With that being said, my list of candidates quickly dwindled down to the following two finalists:

- Corsair VENGEANCE® LPX 64GB (2 x 32GB) Memory Kit: This kit boasts a frequency of 3200MHz at a CAS latency of 16 (full timings: 16-20-20-38), and a total module height of 31mm.

- Crucial Ballistix 64GB Kit (2 x 32GB) Memory Kit: This kit offers a frequency of 3600MHz at a CAS latency of 16 (full timings: 16-18-18-38), and a total module height of 39.17mm.
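To make the shortlist step concrete, here is a minimal sketch of how the target specs could be checked against the two finalists. The names `MemoryKit` and `meets_target` are made up for illustration, and the numbers are simply the ones quoted above. Rank is not checked, since it is not part of the figures listed for either kit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryKit:
    """Hypothetical container for the specs quoted in this article."""
    name: str
    capacity_gb: int
    modules: int
    frequency_mhz: int
    cas_latency: int
    height_mm: float


def meets_target(kit: MemoryKit, max_height_mm: float = 40.0) -> bool:
    """Return True if the kit satisfies every target spec listed above."""
    return (
        kit.capacity_gb == 64
        and kit.modules == 2
        and 3200 <= kit.frequency_mhz <= 3600
        and 14 <= kit.cas_latency <= 16
        and kit.height_mm <= max_height_mm
    )


CORSAIR = MemoryKit("Corsair Vengeance LPX", 64, 2, 3200, 16, 31.0)
CRUCIAL = MemoryKit("Crucial Ballistix", 64, 2, 3600, 16, 39.17)

for kit in (CORSAIR, CRUCIAL):
    # Remaining space under the 40mm limit imposed by the CPU cooler
    clearance_mm = 40.0 - kit.height_mm
    print(f"{kit.name}: meets target = {meets_target(kit)}, "
          f"clearance = {clearance_mm:.2f}mm")
```

Both kits pass the check, but the clearance figures differ a lot: 9mm for the Corsair modules versus 0.83mm for the Crucial ones.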

Out of the two, the clear winner performance-wise is the Crucial Ballistix kit. If that wasn’t enough, Crucial is a subsidiary of Micron, one of only three memory manufacturers in the world, the other two being Samsung and Hynix23. This means that the company has tighter control over the full manufacturing process of its memory (from the die all the way to the commercial kit), and with that level of control, better quality is to be expected. However, that quality comes at a hefty price. Whereas the Corsair kit cost 220€ at the time of my online lookup, the Crucial kit was 50% more expensive, at almost 330€. This difference in price was the only reason why I decided against the Crucial kit, and ended up going with the Corsair VENGEANCE® LPX 64GB (2 x 32GB) Memory Kit.
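The performance and price gaps between the two kits can be put into numbers with a short sketch. It assumes the advertised 3200MHz and 3600MHz figures are data rates in MT/s, so one clock cycle lasts 2000 / data rate nanoseconds. The function names are my own, and the Crucial price is rounded to 330€.

```python
def cas_latency_ns(data_rate_mts: int, cas_latency: int) -> float:
    """Convert a CAS latency in clock cycles to nanoseconds.

    DDR memory transfers data twice per clock, so the real clock runs at
    half the data rate and one cycle lasts 2000 / data_rate_mts ns.
    """
    return cas_latency * 2000 / data_rate_mts


def price_premium_percent(base_price: float, other_price: float) -> float:
    """How much more expensive other_price is than base_price, in percent."""
    return (other_price - base_price) / base_price * 100


corsair_ns = cas_latency_ns(3200, 16)
crucial_ns = cas_latency_ns(3600, 16)
premium = price_premium_percent(220, 330)

print(f"Corsair 3200 CL16: {corsair_ns:.2f} ns")
print(f"Crucial 3600 CL16: {crucial_ns:.2f} ns")
print(f"Crucial price premium: {premium:.0f}%")
```

With these inputs, the Corsair kit comes out at 10 ns and the Crucial kit at roughly 8.89 ns, for a price premium of 50%.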

Conclusions

So far, this installment has been the most challenging to nail down. I have again barely scratched the surface of the topic, yet had a very hard time trying to mention just enough about the different memory aspects and parameters to construct a semi-cohesive picture of memory performance. Even though the topic is so rich, I had a lot of trouble finding reliable information in some areas, and had to deal with a lot of contradictory information from different sources, which required a lot of going back and forth.

I have to admit that I only became aware of a lot of the information mentioned here after purchasing my memory kit, when it was time to do this write-up. But then again, this is the whole point of writing this stuff down. Having to explain everything, I ended up asking questions I had not thought of during my initial research. The main reason why I still ended up with the Corsair kit, even though I did not have all the facts listed in this article in mind, goes back to the huge limitation that was the small clearance imposed by my CPU cooler. As I have come to learn, it is not easy to find a 64GB two-stick memory kit with such a limited height. Had I known everything mentioned above, I think I would have gone with the more expensive Crucial modules. At 3600MHz, these modules truly operate in the sweet spot for Ryzen 5000, their timings are tighter than those of the Corsair modules, and as mentioned the build quality is overall of a higher level. However, the Crucial kit leaves less than one millimeter of clearance to the cooler I am using, so I might have had some issues mounting it.

Finally, similarly to previous installments in this series, I was hoping to actually discuss multiple other components in this article. However, the discussion about RAM ended up taking longer than I expected. There aren’t many components left, so with a little luck I might be able to fit the remaining topics of discussion in one last article. I guess we will all find out then.

References

- CGDirector: How Much RAM (Memory) Do You Need? Different Workloads explored

- CGDirector: MT/s vs MHz (Datarate vs Frequency) in RAM Modules

- Hardware Canucks: Choosing the best AMD Ryzen 5000 Memory – A Beginner’s Guide

- MSI Forums: RAM explained: Why two modules are better than four / single vs. dual-rank / stability testing (archived on 07.08.2022)

- CGDirector: Guide to RAM (Memory) Latency – How important is it?

- CGDirector: Single Channel vs Dual Channel RAM [+Performance Compared]

- Wikipedia: DDR SDRAM#Double data rate (DDR) SDRAM specification

- Single Rank vs Dual Rank RAM: Differences & Performance Impact

- GamersNexus: RAM Performance Benchmark: Single-Channel vs. Dual-Channel - Does It Matter?

- CGDirector: Why Samsung B-Die Is So Popular & Where To Find It